Hey guys,

Last Sunday night we had our cool meetup about ASO and SEO for mobile. Thank you all for attending; you can see the photos and videos (in Hebrew) on our Facebook page.

The turnout wasn’t bad, and people were engaged with the topic, which is always a good thing.

The same goes for Google’s indexing of your pages and, more generally, your content.

Cracking this mystery always eludes webmasters, and the use of Python scripts to do so is against Google’s policy, which prompted this response from Gary Illyes:

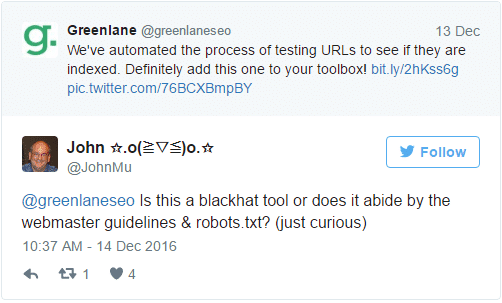

There are other alternatives based on Google Sheets, such as this one, but they give similar results that lack a real confidence level, and they prompt responses such as this one:

Ok, but a webmaster should know what is indexed and what is not, shouldn’t one?

This is what ZEFO is for: to collect relevant data from more than one source and thereby provide relevant insights about one’s SEO strategy.

Recently, we stumbled upon the R script method, which derives its information from Google Analytics and claims compliance with Google’s policy (for the time being):

Courtesy of Mark Edmondson

According to its creator, it checks your site’s XML sitemap for discrepancies between it and what is shown in Google Analytics. It then deduces that if a URL is not found in Analytics for Google organic search results, it likely hasn’t been indexed by Google.
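The comparison described above can be sketched in a few lines of Python. This is not the R script itself, only a minimal illustration of the idea; the function names, the example sitemap, and the assumption that the Analytics data arrives as a set of landing-page paths are all ours.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_paths(sitemap_xml: str) -> set[str]:
    """Return the URL paths found in the <loc> elements of a sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {
        urlparse(loc.text.strip()).path or "/"
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def possibly_unindexed(sitemap_xml: str, organic_paths: set[str]) -> list[str]:
    """Sitemap paths that never show up in the organic-search data.

    Absence from Analytics is only a hint, not proof, that a page is
    missing from the index.
    """
    return sorted(sitemap_paths(sitemap_xml) - organic_paths)


# Hypothetical sitemap, for illustration only.
example_sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/pricing</loc></url>
  <url><loc>https://example.com/blog/aso-tips</loc></url>
</urlset>"""

# Hypothetical set of paths seen as Google organic landing pages.
print(possibly_unindexed(example_sitemap, {"/", "/pricing"}))
```

Any path that the function returns is a candidate for a closer look, nothing more.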

We have two problems with this method:

R is quite an unfamiliar language to most webmasters, so people are unlikely to pick it up.

It’s bug-ridden, so it wouldn’t give you the edge you want and would crash more than once.

OK, what about elimination? That is a rule of thumb and, probability-wise, has been correct at least 80% of the time, right?

You can also just go to the Google Analytics Query Explorer with the data you are looking for and compare notes with other methods to reach a better conclusion. By multiplying probabilities, you could potentially find what has or hasn’t been indexed with at least a 90% confidence level. That still leaves us with an alpha of 10%, which is huge, but it is still better than a Pareto 80% confidence level, wouldn’t you agree?
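One possible reading of "multiplying probabilities" is sketched below. It assumes the checks err independently of each other, which is a strong assumption that real SEO data may not satisfy, and the accuracy figures passed in are illustrative inputs rather than measurements. The function name is ours.

```python
from math import prod


def combined_confidence(accuracies):
    """Chance that at least one of several independent checks is right.

    Multiplies the individual error rates (1 - accuracy) to get the
    chance that every check is wrong, then takes the complement.
    """
    error_rates = [1.0 - a for a in accuracies]
    return 1.0 - prod(error_rates)


# Illustrative inputs only: an 80% rule of thumb plus a coin-flip check.
print(round(combined_confidence([0.8, 0.5]), 2))
```

Under this reading, an 80% method paired with a second check that is right only half the time already lands at roughly 90%, leaving an alpha of about 10%.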

All you need to do is download the relevant data in TSV format and run it through Excel (yes, in the world of Google Sheets we also use Excel; deal with it, Generation Z!).
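If you would rather not open Excel, the same TSV export can be read with a short Python sketch. The column names `ga:landingPagePath` and `ga:sessions` and the exact layout of the export are assumptions here; adjust them to match the header row of your own file.

```python
import csv
import io


def landing_paths_from_tsv(tsv_text: str,
                           path_column: str = "ga:landingPagePath") -> set[str]:
    """Collect the landing-page paths listed in a tab-separated export."""
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    # Skip rows where the path column is missing or empty.
    return {row[path_column].strip() for row in reader if row.get(path_column)}


# Hypothetical export contents, for illustration only.
example_tsv = "ga:landingPagePath\tga:sessions\n/\t120\n/pricing\t45\n"

print(sorted(landing_paths_from_tsv(example_tsv)))
```

The resulting set of paths is what you would then compare against the URLs in your XML sitemap.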

Be advised that this isn’t a cure for the disease: your XML sitemap won’t necessarily reveal that something is not indexed, and large websites face that problem all the time.

In case you do wish to use it, here’s a quick look at the method:

(Courtesy of Search Engine Land)

The last method we checked was the log file method. It gives us an abundance of data, and because information such as bots entering the system is “painted”, it could give us a better indication of whether or not segments of the website were actually indexed.

So this would be binary: each page was either visited by a bot crawling the site or it was not.
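A minimal sketch of the log file check might look like the following. It assumes the server writes the common "combined" access log format and that matching the string `Googlebot` in the user agent is good enough for a first pass. User agents can be spoofed, so a real check would also verify the visitor's address. The function name and the sample log lines are ours.

```python
import re

# Assumed "combined" access log layout; adapt the pattern to your server.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_paths(log_lines):
    """Return the paths that a Googlebot user agent fetched with status 200."""
    crawled = set()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if (match
                and "Googlebot" in match.group("agent")
                and match.group("status") == "200"):
            crawled.add(match.group("path"))
    return crawled


# Hypothetical log lines, for illustration only.
example_log = [
    '66.249.66.1 - - [10/Oct/2017:13:55:36 +0000] "GET /pricing HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; '
    '+http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2017:13:56:01 +0000] "GET /blog/aso-tips HTTP/1.1" '
    '200 7300 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

print(googlebot_paths(example_log))
```

Keep in mind that a crawl is only evidence that the bot saw the page; it does not guarantee that the page ended up in the index.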

Once we use all this information, we can reach a higher confidence level, perhaps even 95%-97%. That leaves a very low alpha of 3%-5%, which gives us conclusive data as to whether or not our site has been indexed by Google.

As a one-stop shop for SEO strategy, we have devised a great self-serve tool that pulls information from all relevant sources and gives you the insights you are really looking for.